Image Credits: DeepMind

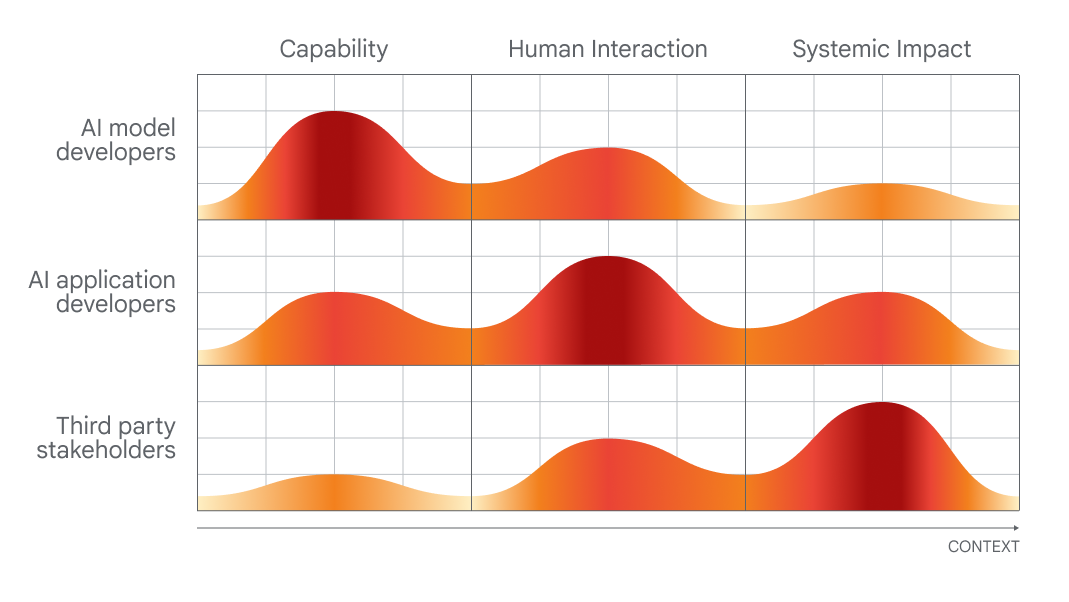

Chart showing which people would be best at evaluating which aspects of AI. Image Credits: Google DeepMind

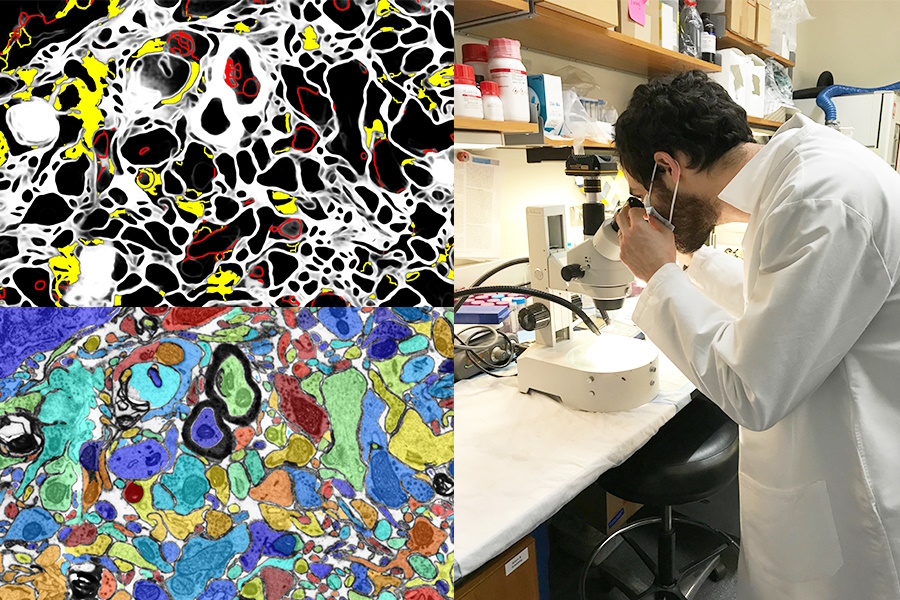

Image Credits: MIT/Harvard University

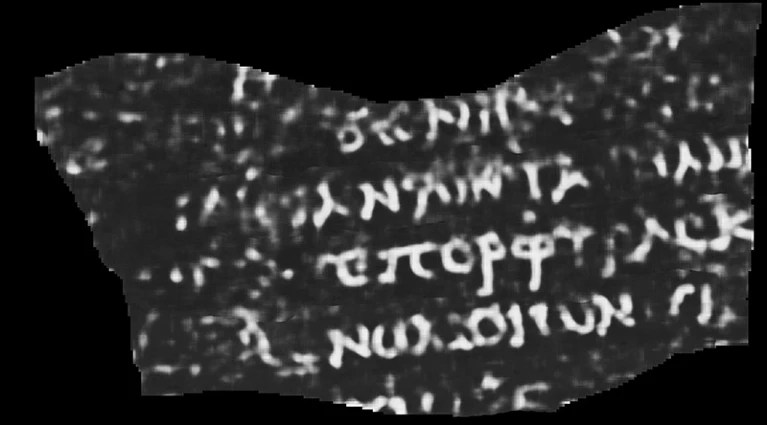

ML-interpreted CT scan of a burned, rolled-up papyrus. The visible word reads “Purple.” Image Credits: UK Photo

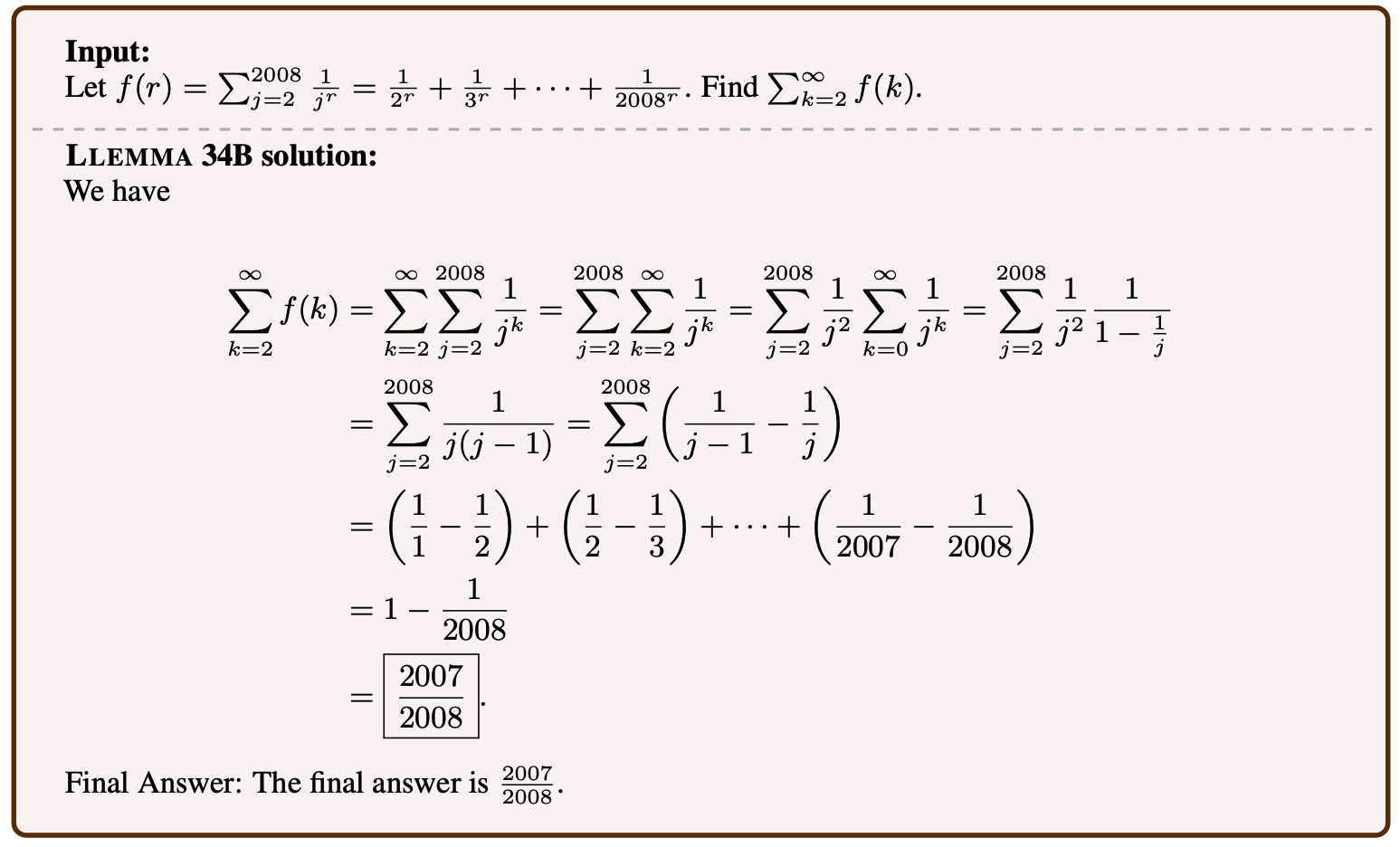

Example of a mathematical problem being solved by Llemma. Image Credits: Eleuther AI

Images shown to people, left, and generative AI guesses at what the person is perceiving, right. Image Credits: Meta

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, DeepMind, the Google-owned AI R&D lab, released a paper proposing a framework for evaluating the societal and ethical risks of AI systems.

The timing of the paper, which calls for varying levels of involvement from AI developers, app developers and “broader public stakeholders” in evaluating and auditing AI, isn’t accidental.

DeepMind is airing its perspective, very visibly, ahead of on-the-ground policy talks at the two-day summit. And, to give credit where it’s due, the research lab makes a few reasonable (if obvious) points, such as calling for approaches to examine AI systems at the “point of human interaction” and the ways in which these systems might be used and embedded in society.

But in weighing DeepMind’s proposals, it’s informative to look at how the lab’s parent company, Google, scores in a recent study released by Stanford researchers that ranks 10 major AI models on how openly they operate.

Rated on 100 criteria, including whether its maker disclosed the sources of its training data, information about the hardware it used, the labor involved in training and other details, PaLM 2, one of Google’s flagship text-analyzing AI models, scores a measly 40%.

Now, DeepMind didn’t develop PaLM 2, at least not directly. But the lab hasn’t historically been consistently transparent about its own models, and the fact that its parent company falls short on key transparency measures suggests that there’s not much top-down pressure for DeepMind to do better.

On the other hand, in addition to its public musings about policy, DeepMind appears to be taking steps to change the perception that it’s tight-lipped about its models’ architectures and inner workings. The lab, along with OpenAI and Anthropic, committed several months ago to providing the U.K. government “early or priority access” to its AI models to support research into evaluation and safety.

The question is, is this merely performative? No one would accuse DeepMind of philanthropy, after all: the lab rakes in hundreds of millions of dollars in revenue each year, mainly by licensing its work internally to Google teams.

Perhaps the lab’s next big ethics test is Gemini, its forthcoming AI chatbot, which DeepMind CEO Demis Hassabis has repeatedly promised will rival OpenAI’s ChatGPT in its capabilities. Should DeepMind wish to be taken seriously on the AI ethics front, it’ll have to fully and thoroughly detail Gemini’s weaknesses and limitations, not just its strengths. We’ll certainly be watching closely to see how things play out over the coming months.

Here are some other AI stories of note from the past few days:

More machine learnings

Machine learning models are constantly leading to advances in the biological sciences. AlphaFold and RoseTTAFold were examples of how a stubborn problem (protein folding) could be, in effect, trivialized by the right AI model. Now David Baker (creator of the latter model) and his labmates have expanded the prediction process to include more than just the structure of the relevant chains of amino acids. After all, proteins exist in a soup of other molecules and atoms, and predicting how they’ll interact with stray compounds or elements in the body is essential to understanding their actual shape and activity. RoseTTAFold All-Atom is a big step forward for simulating biological systems.

Having a visual AI enhance lab work or act as a learning tool is also a great opportunity. The SmartEM project from MIT and Harvard put a computer vision system and ML control system inside a scanning electron microscope, which together drive the device to examine a specimen intelligently. It can avoid areas of low importance, focus on interesting or clear ones, and do smart labeling of the resulting image as well.

Using AI and other high tech tools for archaeological purposes never gets old (if you will) for me. Whether it’s lidar revealing Mayan cities and highways or filling in the gaps of incomplete ancient Greek texts, it’s always cool to see. And this reconstruction of a scroll thought destroyed in the volcanic eruption that leveled Pompeii is one of the most impressive yet.

University of Nebraska-Lincoln CS student Luke Farritor trained a machine learning model to amplify the subtle patterns on scans of the charred, rolled-up papyrus that are invisible to the naked eye. His was one of many methods being attempted in an international challenge to read the scrolls, and it could be refined to perform valuable academic work. Nature has lots more info. What was in the scroll, you ask? So far, just the word “purple,” but even that has the papyrologists losing their minds.

Another academic victory for AI is in this system for vetting and suggesting citations on Wikipedia. Of course, the AI doesn’t know what is true or factual, but it can gather from context what a high-quality Wikipedia article and citation looks like, and scrape the site and web for alternatives. No one is suggesting we let the robots run the famously user-driven online encyclopedia, but it could help shore up articles for which citations are lacking or editors are unsure.

Language models can be fine-tuned on many topics, and higher math is surprisingly one of them. Llemma is a new open model trained on mathematical proofs and papers that can solve fairly complex problems. It’s not the first (Google Research’s Minerva is working on similar capabilities), but its success on similar problem sets and improved efficiency show that “open” models (for whatever the term is worth) are competitive in this space. It’s not desirable that certain types of AI should be dominated by private models, so replication of their capabilities in the open is valuable even if it doesn’t break new ground.

Troublingly, Meta is progressing in its own academic work toward reading minds, but as with most studies in this area, the way it’s presented rather oversells the process. In a paper called “Brain decoding: Toward real-time reconstruction of visual perception,” it may seem a bit like they’re straight up reading minds.

But it’s a little more indirect than that. By studying what a high-frequency brain scan looks like when people are looking at images of certain things, like horses or airplanes, the researchers are able to then perform reconstructions in near real time of what they think the person is thinking of or looking at. Still, it seems likely that generative AI has a part to play here in how it can create a visual expression of something even if it doesn’t correspond directly to scans.

Should we be using AI to read people’s minds, though, if it ever becomes possible? Ask DeepMind (see above).

Last up, a project at LAION that’s more aspirational than concrete right now, but laudable all the same. Multilingual Contrastive Learning for Audio Representation Acquisition, or CLARA, aims to give language models a better understanding of the nuances of human speech. You know how you can pick up on sarcasm or a fib from sub-verbal signals like tone or pronunciation? Machines are pretty bad at that, which is bad news for any human-AI interaction. CLARA uses a library of audio and text in multiple languages to identify some emotional states and other non-verbal “speech understanding” cues.