
The Tech Coalition, the group of tech companies developing approaches and policies to combat online child sexual exploitation and abuse (CSEA), today announced the launch of a new program, Lantern, designed to enable social media platforms to share “signals” about activity and accounts that might violate their policies against CSEA.

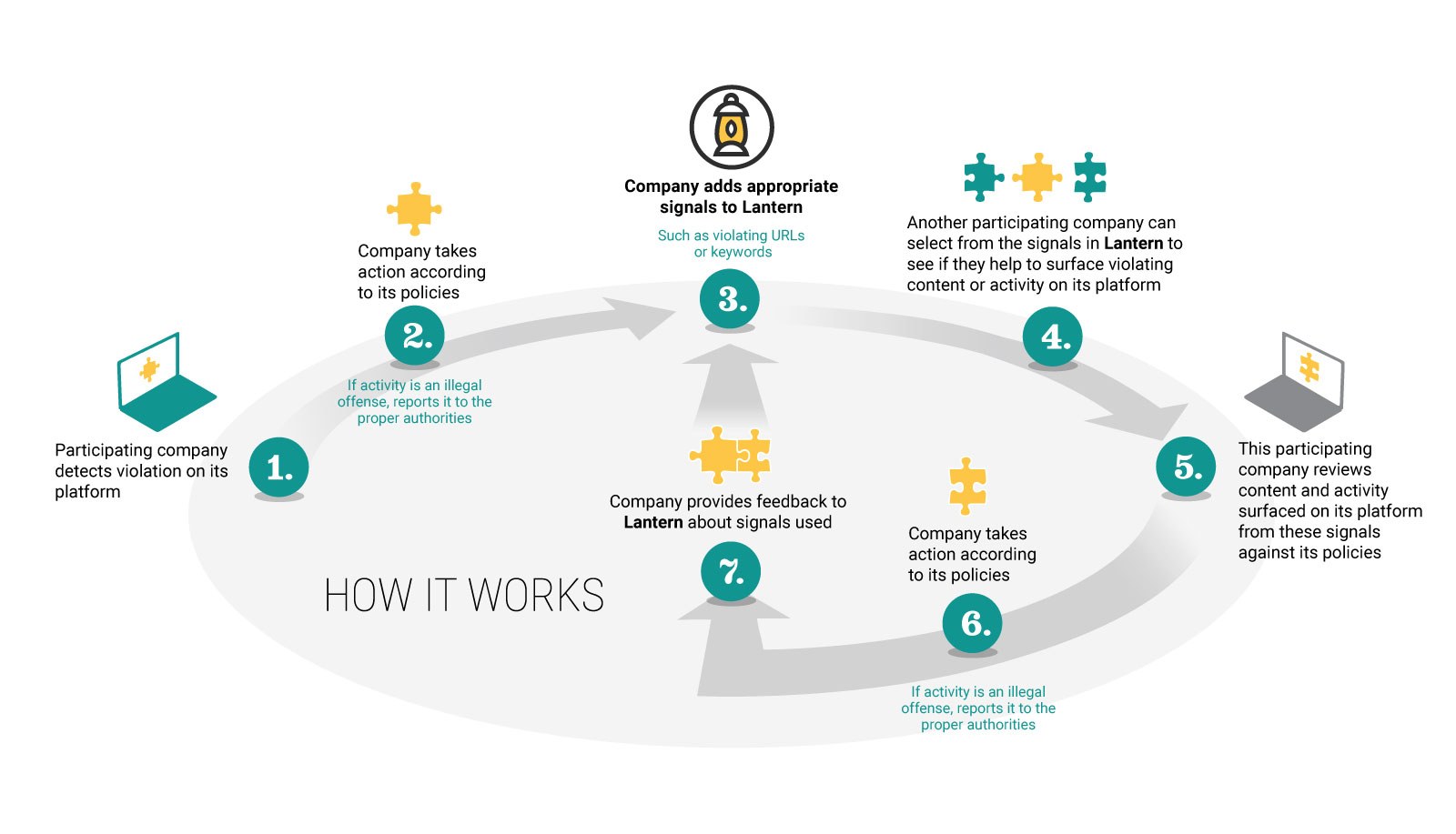

Participating platforms in Lantern (which so far include Discord, Google, Mega, Meta, Quora, Roblox, Snap and Twitch) can upload signals to Lantern about activity that runs afoul of their terms, The Tech Coalition explains. Signals can include information tied to policy-violating accounts, such as email addresses and usernames, or keywords used to groom as well as buy and sell child sexual abuse material (CSAM). Other participating platforms can then select from the signals available in Lantern, run the selected signals against their platform, review any activity and content the signals surface and take appropriate action.

The Tech Coalition is careful to note that these signals aren’t definitive proof of abuse. Rather, they offer only clues for follow-up investigations, as well as evidence to supply to the relevant authorities and law enforcement.

That being the case, signals uploaded to Lantern can be useful, seemingly. During a pilot program, The Tech Coalition, which claims that Lantern has been under development for two years with regular feedback from outside “experts,” says that the file hosting service Mega shared URLs that Meta used to remove more than 10,000 Facebook profiles and pages and Instagram accounts.

After the initial group of companies in the “first phase” of Lantern evaluate the program, additional participants will be welcomed to join, The Tech Coalition says.

“Because [child sexual abuse] spans across platforms, in many cases, any one company can only see a fragment of the harm facing a victim. To uncover the full picture and take proper action, companies need to work together,” The Tech Coalition wrote in a blog post published this morning. “We are committed to including Lantern in the Tech Coalition’s annual transparency report and providing participating companies with recommendations on how to incorporate their participation in the program into their own transparency reporting.”

Despite disagreements on how to tackle CSEA without stifling online privacy, there’s concern about the growing breadth of child abuse material, both real and deepfaked, now circulating online. In 2022, the National Center for Missing and Exploited Children received more than 32 million reports of CSAM.

A recent RAINN and YouGov survey found that 82% of parents believe the tech industry, particularly social media companies, should do more to protect children from sexual abuse and exploitation online. That’s spurred lawmakers into action, albeit with mixed results.

In September, the attorneys general in all 50 U.S. states, plus four territories, signed onto a letter calling for Congress to take action against AI-enabled CSAM. Meanwhile, the European Union has proposed to mandate that tech companies scan for CSAM while identifying and reporting grooming activity targeting kids on their platforms.