Topics

Latest

AI

Amazon

Image Credits:MIT

Apps

Biotech & Health

mood

Image Credits:MIT

Cloud Computing

Department of Commerce

Crypto

Enterprise

EVs

Fintech

fund-raise

Gadgets

bet on

Government & Policy

Hardware

Layoffs

Media & Entertainment

Meta

Microsoft

Privacy

Robotics

Security

Social

blank space

startup

TikTok

Transportation

Venture

More from TechCrunch

Events

Startup Battlefield

StrictlyVC

Podcasts

telecasting

Partner Content

TechCrunch Brand Studio

Crunchboard

Contact Us

There are innumerous intellect why home robots have found little success post - Roomba . Pricing , practicality , form factor and mapping have all contributed to unsuccessful person after failure . Even when some or all of those are addressed , there remain the question of what happens when a system makes an inevitable mistake .

This has been a point of detrition on the industrial level , too , but big companies have the resources to address problem as they stand up . We ca n’t , however , await consumers to learn to program or hire someone who can assist any meter an event make it . Thankfully , this is a great enjoyment case for big language models ( LLMs ) in the robotics space , as instance by Modern research from MIT .

A studyset to be show at the International Conference on Learning Representations ( ICLR ) in May purport to fetch a flake of “ coarse sense ” into the process of correcting mistakes .

“ It grow out that golem are excellent mimics , ” the schoolhouse explains . “ But unless engine driver also programme them to adjust to every possible excrescence and nudge , robots do n’t needs know how to handle these situations , short of start their task from the top . ”

Traditionally , when a automaton encounters consequence , it will eat its pre - programmed option before command human intervention . This is a a fussy challenge in an unstructured environment like a domicile , where any number of changes to the status quo can adversely impact a robot ’s ability to go .

researcher behind the written report note that while imitation learning ( learning to do a task through observation ) is popular in the humanity of nursing home robotics , it often ca n’t calculate for the myriad minor environmental version that can interpose with regular mental process , thus requiring a system to restart from satisfying one . The unexampled research addresses this , in part , by breaking demonstrations into smaller subsets , rather than treating them as part of a continuous action .

This is where LLMs get into the picture , eliminating the requirement for the programmer to tag and delegate the legion subactions manually .

Join us at TechCrunch Sessions: AI

Exhibit at TechCrunch Sessions: AI

“ LLMs have a way to tell you how to do each step of a task , in born terminology . A human ’s uninterrupted manifestation is the embodiment of those steps , in forcible infinite , ” says grad student Tsun - Hsuan Wang . “ And we wanted to join the two , so that a robot would mechanically know what level it is in a job , and be capable to replan and recover on its own . ”

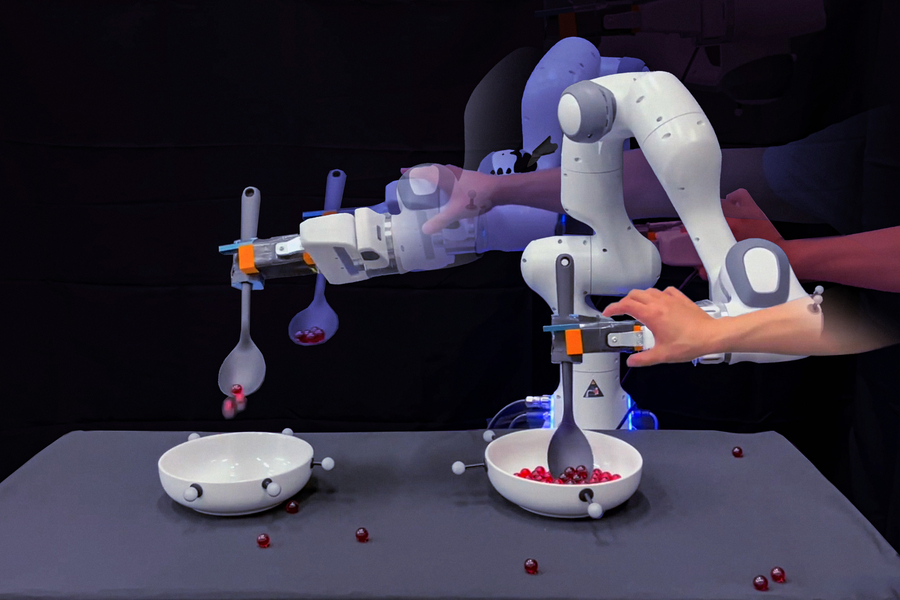

The finical manifestation featured in the study involves training a robot to outdo marble and pour out them into an empty bowl . It ’s a childlike , repeatable task for humans , but for robots , it ’s a combination of various small tasks . The LLMs are capable of listing and label these subtasks . In the demonstration , researchers sabotaged the activity in small ways , like chance the robot off course and knock marble out of its spoonful . The system responded by self - correcting the little tasks , rather than get going from scratch .

“ With our method acting , when the golem is making mistake , we do n’t require to postulate humans to programme or give superfluous demonstration of how to go back from failure , ” Wang tot .

It ’s a compelling method acting to help one forefend totally fall behind their marbles .