Image Credits: chepkoelena / Getty Images

Image Credits: Deepgram

Deepgram has made a name for itself as one of the go-to startups for voice recognition. Today, the well-funded company announced the launch of Aura, its new real-time text-to-speech API. Aura combines highly realistic voice models with a low-latency API to allow developers to build real-time, conversational AI agents. Backed by large language models (LLMs), these agents can then stand in for customer service agents in call centers and other customer-facing situations.

As Deepgram co-founder and CEO Scott Stephenson told me, it’s long been possible to get access to great voice models, but those were expensive and took a long time to compute. Meanwhile, low-latency models tend to sound robotic. Deepgram’s Aura combines human-like voice models that render extremely fast (typically in well under half a second) and, as Stephenson noted repeatedly, does so at a low price.

“Everybody now is like: ‘hey, we need real-time voice AI bots that can perceive what is being said and that can understand and generate a response, and then they can speak back,’” he said. In his view, it takes a combination of accuracy (which he described as table stakes for a service like this), low latency and acceptable costs to make a product like this worthwhile for businesses, especially when combined with the relatively high cost of accessing LLMs.

Deepgram argues that Aura’s pricing currently beats virtually all its competitors at $0.015 per 1,000 characters. That’s not all that far off Google’s pricing for its WaveNet voices at $0.016 per 1,000 characters and Amazon’s Polly’s Neural voices at the same $0.016 per 1,000 characters, but, granted, it is cheaper. Amazon’s highest tier, though, is significantly more expensive.
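To put those per-1,000-character rates in perspective, here is a rough sketch of the arithmetic in Python. The rates are the ones quoted above; the 10-million-character monthly workload and the `tts_cost` helper are assumptions made purely for illustration, and real bills may differ because of free tiers, volume discounts or rounding rules not covered here.

```python
# Per-1,000-character rates (USD) as quoted in the article.
RATES_PER_1K_CHARS = {
    "Deepgram Aura": 0.015,
    "Google WaveNet": 0.016,
    "Amazon Polly Neural": 0.016,
}


def tts_cost(characters: int, rate_per_1k: float) -> float:
    """Return the USD cost of synthesizing `characters` characters."""
    return characters / 1000 * rate_per_1k


if __name__ == "__main__":
    # Hypothetical workload, chosen only to make the gap visible.
    monthly_characters = 10_000_000
    for name, rate in RATES_PER_1K_CHARS.items():
        cost = tts_cost(monthly_characters, rate)
        print(f"{name}: ${cost:,.2f}")
```

At that assumed volume, the difference works out to roughly $150 versus $160 a month, which is consistent with the point that Aura is cheaper but not by a wide margin.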

“You have to hit a really good price point across all [segments], but then you have to also have amazing latencies, speed, and then amazing accuracy as well. So it’s a really hard thing to hit,” Stephenson said about Deepgram’s general approach to building its product. “But this is what we focused on from the beginning and this is why we built for four years before we released anything because we were building the underlying infrastructure to make that real.”

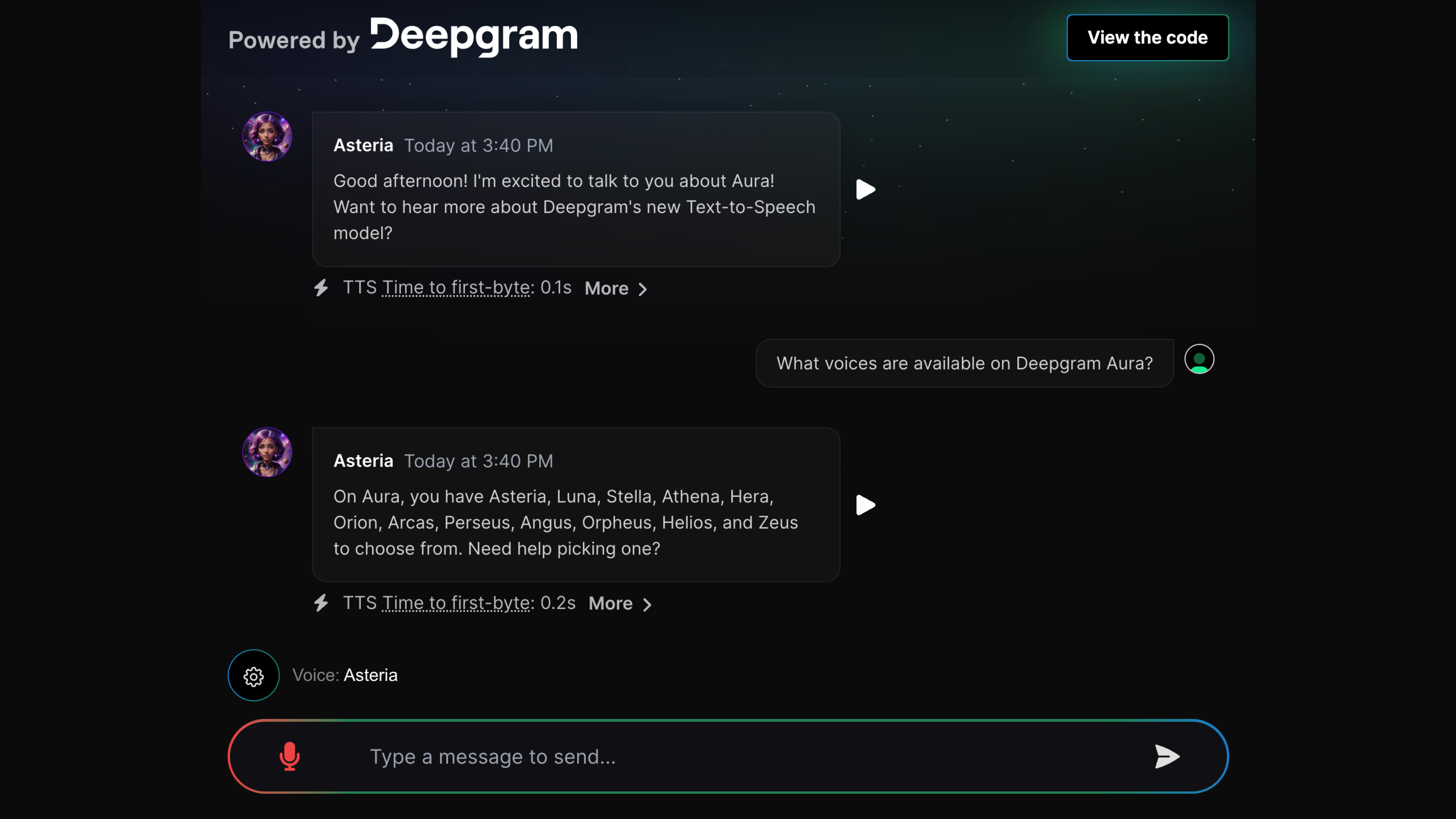

Aura offers around a dozen voice models at this point, all of which were trained on a dataset Deepgram created together with voice actors. The Aura models, just like all of the company’s other models, were trained in-house. Here is what that sounds like:

You can try a demo of Aura here. I’ve been testing it for a bit and even though you’ll sometimes come across some odd pronunciations, the speed is really what stands out, in addition to Deepgram’s existing high-quality speech-to-text model. To highlight the speed at which it generates responses, Deepgram notes the time it took the model to start speaking (generally less than 0.3 seconds) and how long it took the LLM to finish generating its response (which is typically just under a second).

Deepgram lands new cash to grow its enterprise voice-recognition business